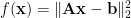

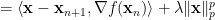

In our recent paper [2], we investigate the lp-l2 problem with  . Our algorithms are built on a recent algorithm known as the MFISTA [1], where a key step of soft shrinkage is replaced by a global solver for the minimization of a 1-D nonconvex problem.

. Our algorithms are built on a recent algorithm known as the MFISTA [1], where a key step of soft shrinkage is replaced by a global solver for the minimization of a 1-D nonconvex problem.

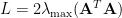

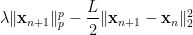

It has been proved in [1] that for the l1-l2 problem, ISTA has a global convergence rate of  , and FISTA shares an improved complexity result of

, and FISTA shares an improved complexity result of  . We naturally explore whether a similar convergence result exists for our algorithm reported in [2]. The lp-l2 problem considered is as follows

. We naturally explore whether a similar convergence result exists for our algorithm reported in [2]. The lp-l2 problem considered is as follows

.

.

Let  and

and  . The Lipschitz constant of the gradient

. The Lipschitz constant of the gradient  is

is  . It follows that

. It follows that

.

.

Thus,

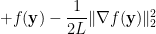

It can be derived that

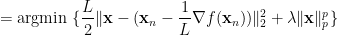

Suppose the current iterate is  , and the next iterate is

, and the next iterate is  . By virtue of ISTA or FISTA (see [1]),

. By virtue of ISTA or FISTA (see [1]),

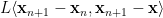

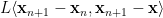

It is not hard to derive that

-

-

Therefore, if we can prove

,

,

Then the following inequality holds

which essentially fits into the result of Lemma 2.3 in [1]. And if the inequality above holds, then the convergence proof for the lp-l2 problem follows in a same way as that of ISTA or FISTA for the l1-l2 problem.

At this point, the current problem is whether we can prove

.

.

It can be calculated that this inequality holds if and only if

[1] A. Beck and M. Teboulle, “A fast iterative shrinkage-thresholding algorithm for linear inverse problems,” SIAM Journal on Imaging Sciences, vol. 2, no. 1, pp. 183-202, 2009.

[2] J. Yan and W.-S. Lu, “New algorithms for sparse representation of discrete signals based on lp-l2 optimization,” submitted to PacRim 2011.

,

is reached. Simply speaking, our algorithm assures that the next iterate will still be

.

and

. Define

, and

, then

,

.

.

,

.

,

.

.

.